For years, India’s sports story has been defined by passion, perseverance, and potential. From the narrow lanes where children learn to play barefoot to the grand arenas where national teams compete, one thing has remained constant — talent has never been India’s weakness. What’s often missing is structure, continuity, and precision. And this is exactly where technology becomes the game-changer.

In modern sport, technology isn’t an accessory — it’s the glue that connects every part of the ecosystem: the athlete, the coach, the fan, and the business behind it. For Indian sports to scale from sporadic brilliance to consistent global performance, technology must move from being an afterthought to being at the core of development.

Why Technology Matters in Sports

Technology in sport is no longer limited to scoreboards and replays. It now shapes every aspect of the sporting journey, from training and injury prevention to fan engagement and talent discovery.

It helps athletes understand themselves better, helps coaches make smarter decisions, and allows fans to connect more deeply. In essence, technology bridges the distance between effort and excellence.

For a country as young, digital, and rapidly urbanizing as India, integrating technology into sports isn’t just desirable; it’s inevitable.

The Digital Transformation of Global Sports

Across the world, technology has redefined how sport is played, managed, and consumed.

- Performance analytics now turn every movement into measurable data.

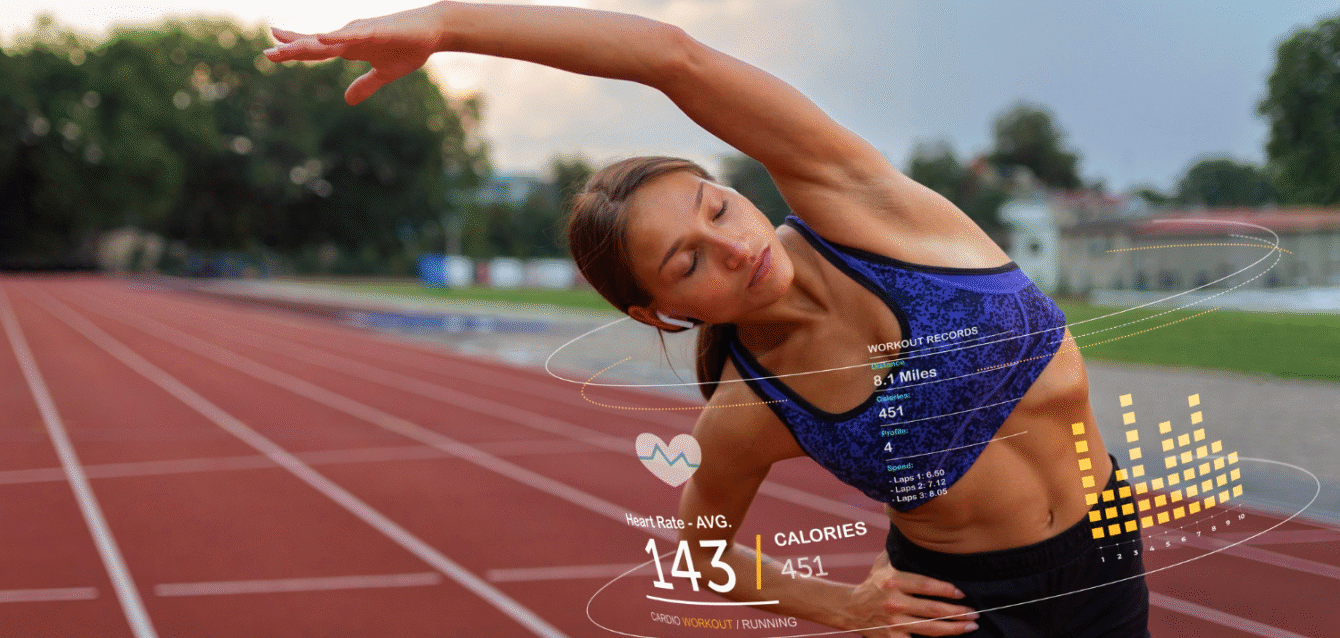

- Wearables track heart rate, recovery, and fatigue in real time.

- Video analysis tools break down every action for improvement.

- Virtual reality (VR) allows athletes to simulate competition conditions.

- AI and machine learning predict injury risks, tailor training, and optimize nutrition.

Global leagues and academies have embraced data-driven culture to build smarter teams and more resilient athletes. India, with its deep talent pool and expanding digital infrastructure, is perfectly positioned to ride this next wave — but only if innovation reaches every level, from elite centers to grassroots.

Where India Stands Today

India’s sports technology ecosystem is in its early but exciting phase. In the past five years, over 200 startups have entered the space, building solutions in fitness tracking, sports analytics, esports, coaching platforms, and fan engagement.

The growth is being driven by three forces:

- Digital penetration: With over 850 million internet users, even rural and semi-urban regions are now connected.

- Fitness culture: A new generation values health and performance, creating demand for data-driven insights.

- Sports commercialization: Leagues and clubs increasingly see analytics and fan-tech as key to revenue and brand growth.

From cricket and badminton to athletics and kabaddi, teams are beginning to use technology for scouting, recovery, and tactical analysis. But the real potential lies beyond the professional tier — in democratizing access to these tools across the country.

Tech for Athletes: The New Edge

At the heart of every successful athlete today lies information — accurate, actionable, and timely.

1. Data-driven training

Wearables and performance-tracking devices can turn every practice session into insight. They monitor speed, heart rate, and endurance levels, helping coaches personalize routines rather than rely on instinct.

A sprinter, for instance, can analyze stride length, acceleration, and muscle fatigue to adjust technique. A badminton player can track movement heatmaps to improve agility. When such feedback becomes routine, performance evolves faster and more sustainably.
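As a rough illustration of the sprinter example, the sketch below derives per-segment speed, stride length, and acceleration from timing-gate splits. The `Split` record, its field names, and the idea of counting steps at each gate are assumptions made for this example, not a description of any particular device, and the acceleration figure is only a coarse estimate based on average segment speeds.

```python
from dataclasses import dataclass


@dataclass
class Split:
    """Cumulative reading at one timing gate (hypothetical data layout)."""
    distance_m: float  # distance from the start line
    time_s: float      # elapsed time since the start
    steps: int         # steps taken since the start


def segment_metrics(splits):
    """Return speed, stride length and acceleration for each segment."""
    rows = []
    prev = Split(0.0, 0.0, 0)
    prev_speed = 0.0
    for s in splits:
        distance = s.distance_m - prev.distance_m
        duration = s.time_s - prev.time_s
        steps = s.steps - prev.steps
        speed = distance / duration
        rows.append({
            "speed_mps": speed,
            "stride_m": distance / steps,
            # Coarse estimate: change in average speed over segment time.
            "accel_mps2": (speed - prev_speed) / duration,
        })
        prev, prev_speed = s, speed
    return rows


if __name__ == "__main__":
    # Illustrative numbers only, not measured data.
    run = [Split(10, 1.9, 7), Split(20, 3.0, 12), Split(30, 4.0, 16)]
    for i, row in enumerate(segment_metrics(run), start=1):
        print(f"segment {i}: {row}")
```

Tracked session after session, the same three numbers give a coach a simple trend line to compare against how the athlete says the run felt.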

2. Sports science and biomechanics

Technology-driven sports science allows athletes to understand the mechanics of movement — how joints, muscles, and energy systems interact during play. Motion-capture systems, pressure sensors, and high-speed cameras make it possible to refine skills with scientific precision.

As more Indian academies adopt biomechanical labs and analytics-based feedback systems, the difference between local and international training standards will narrow rapidly.

3. Injury prevention and recovery

Injury has ended countless sporting careers prematurely. With AI-based monitoring and predictive algorithms, coaches can identify overuse patterns before injuries occur. Recovery technologies like cryotherapy, compression therapy, and wearable sensors that monitor muscle recovery are now within reach of professional and semi-professional setups.

This proactive approach doesn’t just save careers; it extends them.
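One simple way to picture overuse monitoring is the acute-to-chronic workload ratio used in sports science, which compares an athlete's recent training load with their longer-term average. The sketch below is a plain heuristic, not the AI-based prediction mentioned above; the 7-day and 28-day windows and the 1.5 threshold are assumptions chosen for illustration, and real programs would set them with medical staff.

```python
def acute_chronic_ratio(daily_loads, acute_days=7, chronic_days=28):
    """Ratio of recent average daily load to longer-term average daily load.

    daily_loads is a list of per-day training loads, oldest first
    (for example session duration multiplied by perceived effort).
    """
    if len(daily_loads) < chronic_days:
        raise ValueError("need at least chronic_days of load data")
    acute = sum(daily_loads[-acute_days:]) / acute_days
    chronic = sum(daily_loads[-chronic_days:]) / chronic_days
    if chronic == 0:
        return 0.0  # no training history to compare against
    return acute / chronic


def flag_overuse(daily_loads, threshold=1.5):
    """Return True when the recent load spike exceeds the assumed threshold."""
    return acute_chronic_ratio(daily_loads) > threshold


if __name__ == "__main__":
    # Three steady weeks followed by a week at double the load.
    loads = [100] * 21 + [200] * 7
    print(round(acute_chronic_ratio(loads), 2), flag_overuse(loads))
```

A flag like this does not diagnose anything; it only tells the coach which athletes deserve a closer look before the next heavy session.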

Tech for Talent Discovery

One of India’s biggest challenges has always been identifying and nurturing talent consistently. Technology can change that story.

Imagine a unified digital talent platform where schools, clubs, and academies upload performance data, video clips, and statistics. AI could then analyze and rank athletes across disciplines and regions. Scouts could access verified profiles of thousands of young players with just a few clicks.

Such systems already exist in countries like the UK and Japan, where every athlete, from school level upward, is digitally mapped. If a similar platform were adopted widely in India, no potential star would go unnoticed simply because of geography or limited exposure.
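The ranking step of such a platform could start out far simpler than AI. The sketch below groups verified results by discipline and sorts them so scouts see the best marks first, regardless of region. The `Result` record, its fields, and the `LOWER_IS_BETTER` set are hypothetical names invented for this example; a real platform would also need verification, age groups, and fairer comparisons across conditions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    """One verified performance uploaded by a school, club or academy."""
    athlete_id: str
    region: str
    discipline: str
    mark: float  # best verified mark, e.g. seconds or metres


# Assumption: timed events, where a smaller mark is the better one.
LOWER_IS_BETTER = {"100m", "400m"}


def rank_by_discipline(results):
    """Return {discipline: results sorted from best to worst}."""
    grouped = defaultdict(list)
    for result in results:
        grouped[result.discipline].append(result)

    rankings = {}
    for discipline, rows in grouped.items():
        # Distances and heights rank high-to-low; times rank low-to-high.
        descending = discipline not in LOWER_IS_BETTER
        rankings[discipline] = sorted(
            rows, key=lambda r: r.mark, reverse=descending
        )
    return rankings


if __name__ == "__main__":
    # Illustrative entries only, not real athletes or marks.
    uploads = [
        Result("A1", "Odisha", "100m", 11.2),
        Result("B7", "Kerala", "100m", 10.9),
        Result("C3", "Punjab", "long_jump", 6.1),
        Result("D4", "Manipur", "long_jump", 6.8),
    ]
    for discipline, rows in rank_by_discipline(uploads).items():
        print(discipline, [(r.athlete_id, r.region, r.mark) for r in rows])
```

Because every region feeds the same list, an athlete from a small district appears next to one from a national center on equal terms.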

Tech for Coaches: Smarter Decisions, Stronger Outcomes

Technology doesn’t replace coaches — it empowers them. Analytics platforms can evaluate athlete progress objectively, reducing bias and guesswork.

Video analysis allows side-by-side comparisons between training and competition, making it easier to identify technical gaps. Cloud-based dashboards can track multiple athletes across regions, helping federations plan development programs better.

Most importantly, technology can train the trainer — offering certification courses, knowledge-sharing platforms, and AI-driven coaching aids that reach even remote areas. A well-equipped coach in a district town can now access the same analytical insights as one at a national training center.

Tech for Fans and Engagement

Fans are the emotional engine of sports, and technology has revolutionized how they participate.

Live-streaming, fantasy gaming, and social engagement platforms have created immersive experiences that were unthinkable a decade ago. Data visualizations and AR-based highlights make matches interactive.

For smaller sports and regional leagues, digital reach is a lifeline — enabling them to build fan bases and attract sponsorships even without traditional broadcasting.

This connectivity between fans and athletes doesn’t just boost viewership; it creates community. It turns spectators into stakeholders, keeping interest alive beyond match days.

Sports Businesses and Startups: India’s Next Innovation Frontier

The intersection of sports, technology, and entrepreneurship is emerging as one of India’s most dynamic frontiers. Startups are developing AI-based performance trackers, affordable sensors, and virtual coaching platforms tailored to Indian conditions.

Some focus on gamified fitness for children, while others build community engagement tools for local leagues. The affordability and accessibility of these solutions are crucial — they allow grassroots athletes, schools, and local academies to benefit from innovation that was once reserved for elite professionals.

As investment flows into the sector, sports technology could become a standalone industry contributing billions to the broader sports economy, much as fintech did for finance.

Bridging the Digital Divide

While innovation is booming, access remains uneven. Urban academies and professional teams have embraced data analytics, but many rural and public institutions still rely on outdated methods. The next phase of transformation must focus on inclusivity — taking technology to the grassroots.

Affordable wearable devices, mobile-based training apps in regional languages, and cloud-based coaching platforms can make tech adoption truly democratic. When digital solutions reach rural schools and small-town athletes, the true revolution begins.

The Future: Connected, Data-Driven, and Human

The beauty of technology in sport lies in its paradox — it makes the game more scientific but also more human. By providing clarity, feedback, and opportunity, it allows athletes to understand themselves better and compete smarter.

India’s sporting story is at a pivotal moment. The passion is there, the talent is endless, and the audience is ready. What’s needed now is the invisible glue — technology that binds everything together: from playgrounds to analytics dashboards, from community fields to global stages.

As India enters its next sporting decade, the question isn’t whether technology will shape its future — it’s how fast the country can embrace it.

Because in sport, as in life, those who adapt faster always move ahead.